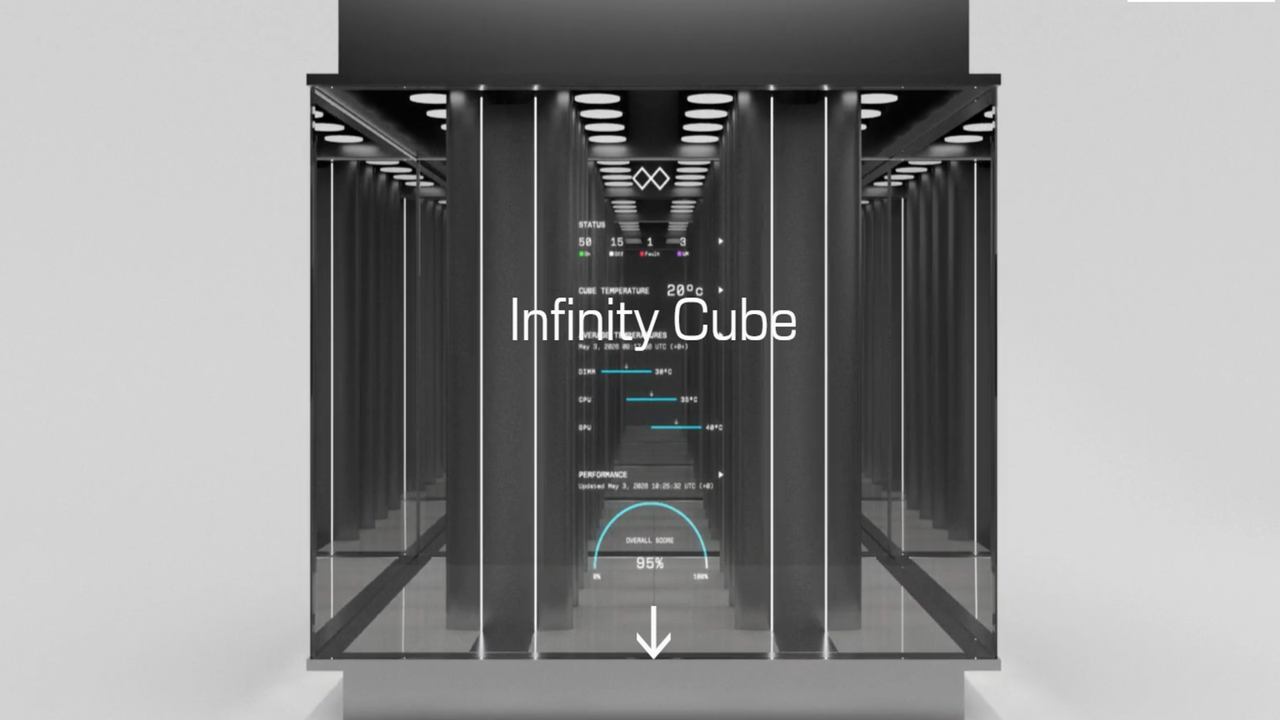

- Odinn Infinity Cube combines multiple Omnia supercomputers into a single glass enclosure

- Memory capacity reaches 86TB of DDR5 ECC registered RAM

- NVMe storage in the cube totals a whopping 27.5PB

Odinn, a California-based startup, has introduced the Infinity Cube as an attempt to compress data-center-class computing into a single, visually contained structure.

At CES 2026, the company unveiled the Odinn Omnia, a movable AI supercomputer. A single system of that size would face clear throughput limitations, and this is where the Cube comes in.

The Infinity Cube is a 14ft x 14ft AI cluster that houses multiple Omnia AI supercomputers in one glass enclosure.

Scaling AI with modular clustering

This device emphasizes extreme component density rather than incremental efficiency improvements.

According to Odinn, a fully customizable core specification allows the Cube to scale up to 56 AMD EPYC 9845 processors, aggregating 8960 CPU cores.

Its GPU capacity extends to 224 Nvidia HGX B200 units, paired with 43TB of combined VRAM.

For memory, the device supports up to 86TB of DDR5 ECC registered RAM, while NVMe storage capacity reaches 27.5PB.
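The quoted maximums can be sanity-checked with simple division. The short Python sketch below uses only the totals given above and derives per-processor and per-GPU figures from them. The variable names are illustrative, and the use of decimal units (1TB = 1,000GB) is an assumption, since Odinn has not published a per-unit breakdown.

```python
# Totals as quoted for the maximum Infinity Cube configuration.
CPUS = 56
CPU_CORES_TOTAL = 8960
GPUS = 224
VRAM_TB = 43
RAM_TB = 86

GB_PER_TB = 1000  # assumption: decimal units, not stated by the vendor


def per_unit(total, count):
    """Return the average amount of `total` per one of `count` units."""
    return total / count


cores_per_cpu = per_unit(CPU_CORES_TOTAL, CPUS)
gpus_per_cpu = per_unit(GPUS, CPUS)
vram_gb_per_gpu = per_unit(VRAM_TB * GB_PER_TB, GPUS)
ram_gb_per_cpu = per_unit(RAM_TB * GB_PER_TB, CPUS)

print(f"Cores per CPU:   {cores_per_cpu:.0f}")
print(f"GPUs per CPU:    {gpus_per_cpu:.0f}")
print(f"VRAM per GPU:    {vram_gb_per_gpu:.0f} GB")
print(f"RAM per CPU:     {ram_gb_per_cpu:.0f} GB")
```

The results are averages implied by the headline numbers, not confirmed specifications. The totals are rounded, so the per-unit values are approximate.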

These figures imply substantial internal interconnect and power distribution demands that the company has not detailed publicly.

The device uses liquid cooling, with each Omnia unit managing its own thermal requirements without shared external infrastructure.

This design avoids reliance on raised floors or centralized cooling plants, at least in theory.

The Infinity Cube relies on a proprietary software layer called NeuroEdge to coordinate workloads across the cluster.

The software integrates with Nvidia’s AI software ecosystem and common frameworks, handling scheduling and deployment automatically.

This abstraction aims to reduce the need for manual tuning, although it also places operational dependency on Odinn’s software maturity.

Institutions that already rely on cloud infrastructure for AI workloads may question whether local orchestration actually simplifies administration under real-world conditions.

The company states that the Infinity Cube suits organizations with strict privacy, safety, or latency requirements that discourage cloud reliance.

Placing infrastructure closer to workloads can reduce network delays, but it also shifts responsibility for uptime, maintenance, and lifecycle management back to the owner.

The idea of presenting data center hardware within compact glass enclosures may appeal aesthetically.

However, the practical tradeoffs between density, accessibility, and resilience remain unresolved without real-world deployment evidence.
